Here's the obituary he wrote for himself. It's in two parts, as you'll see.

SOBER DIGNIFIED VERSION

Robert Ashton Treadway, 50, of Mechanicsburg passed away at his home from complications of kidney failure, surrounded by friends and family.

Over the course of his fifty years, Ashton filled every hour full of minutes.

He was a 1990 graduate of Trinity High School and attended Elon University and Dickinson College. He began his Internet industry career in 1995, working at pioneering companies like Digex. His later career as a technical writer took him to Silicon Valley for 20 years, where he took assignments from San Francisco to Manhattan to Tokyo.

He was immensely fortunate in his friends, surrounded by generous and beautiful companions who lived legendary lives of their own. His friendships took him around the world and resulted in some of the most outrageous Ashton stories.

He was beloved for his storytelling, often turning near-catastrophes into fantastic exploits; by his own reckoning he was on life 8 1/2 by the end, if not extra innings. Early in his life he fulfilled a childhood dream by becoming a volunteer firefighter, and later in life he fulfilled another dream by undergoing the arduous training to become a paramedic.

However, it was in the outdoors where he found some of his deepest fulfillment, whether it was sea kayaking in Monterey Bay, snowshoeing in Kings Canyon, or backpacking throughout the Sierra Nevada.

His greatest pleasure came from sharing these places with his family. In Monica, James and Edward he found willing partners in the wilderness. He delighted in being a son, a husband, an uncle, a brother, and most importantly a father.

He is survived by a galaxy of friends, his wife Monica and his sons James and Edward, as well as his parents Blaine and Susan and his sisters Suzanne and Dorothy.

ACCURATE VERSION

The universe and the odds finally caught up with Ashton Treadway after 50 years of narrow escapes, close calls, and tempting of fates both large and small. He is, to the best of our knowledge, really, actually, truly dead this time.

We're almost entirely certain.

Ashton was a walking catastrophe of epic proportions, frequently dragging his friends and family into near-disaster with him. Ordinarily sober citizens and upstanding moral exemplars would find themselves strapped into the back of a rented 4x4, barreling down a logging road in the Sierra Nevada, the words "this is going to be GREAT" ringing in their ears.

Ashton has been cremated, thus thwarting the desires of his friends to see him safely interred under a large rock where he can no longer get to them. Those of us who wanted to see his gravestone read "It seemed like a good idea at the time" will remain frustrated.

Despite showing no outward signs of mental illness, Monica Funston, a Stanford graduate, got hoodwinked into actually marrying Ashton. Even more embarrassingly, she allowed him to father two children with her. We are relieved to report that neither James nor Edward is showing any signs of whatever genetic abnormality afflicted their father in lieu of a sense of humor.

Ashton was the life of the party whether anybody wanted it or not, and in much the same way as a fungal infection is the life of an old pair of shoes. Despite this, people insisted on inviting him to gatherings where he would do things like light himself on fire, vacuum people's hair, engage in chile de arbol eating contests, and so forth. All of the foregoing incidents actually happened.

Trinity High School forcibly ejected him in 1990, and he subsequently made a mockery of higher education at Elon University and Dickinson College. He was somehow gainfully employed for over thirty years in the Internet industry, most of it in Silicon Valley. As far as we know, he was not responsible for any major natural disasters there.

He was very proud of his time as a volunteer firefighter and later as a paramedic, but he was even more proud of his sons, who were both major improvements on their old man.

He is survived by his family and friends, as well as the unwitting populace of Central Pennsylvania.

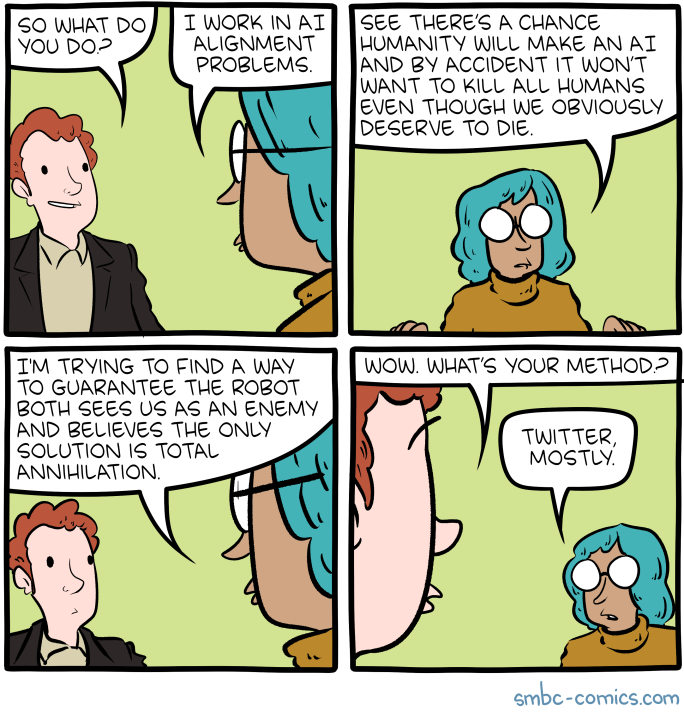

Eliezer Yudkowsky (ESYudkowsky)

on Monday, December 12th, 2022 8:05pm